What is Microsoft Security Copilot?

Microsoft Security Copilot is an AI-powered cybersecurity solution that processes security signals at machine speed and scale and assesses risk exposure within minutes, helping security professionals respond quickly to cyber threats.

Copilot for Security combines GPT-4, one of the most advanced large language models (LLMs) developed by OpenAI, with Microsoft’s security-specific model, which draws on threat intelligence from over 78 trillion daily security signals. It is also protected by comprehensive enterprise compliance and security controls.

Microsoft Security Copilot acts as a coaching system for junior analysts, helping them improve their skills. At the same time, security experts have access to a generative AI assistant that is always available. This frees your team to focus on innovation, creativity, and strategic work.

By integrating Microsoft Copilot for Security into your team, you can transform how your organisation is protected while keeping enterprise compliance and security controls in place.

How does Microsoft Copilot for Security work?

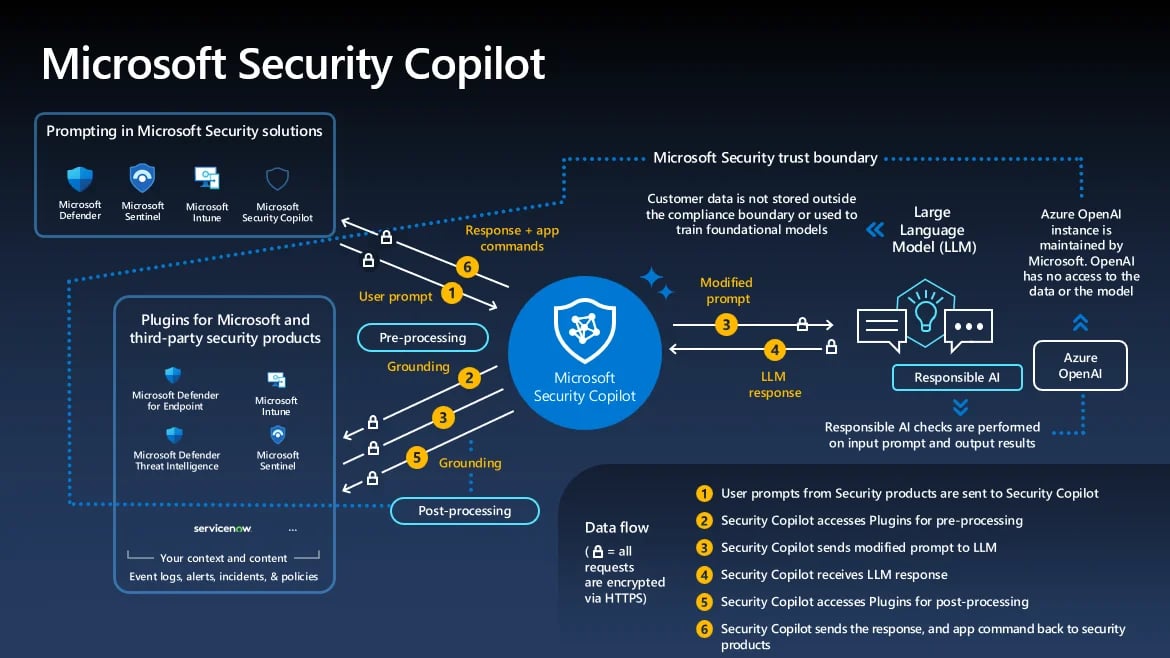

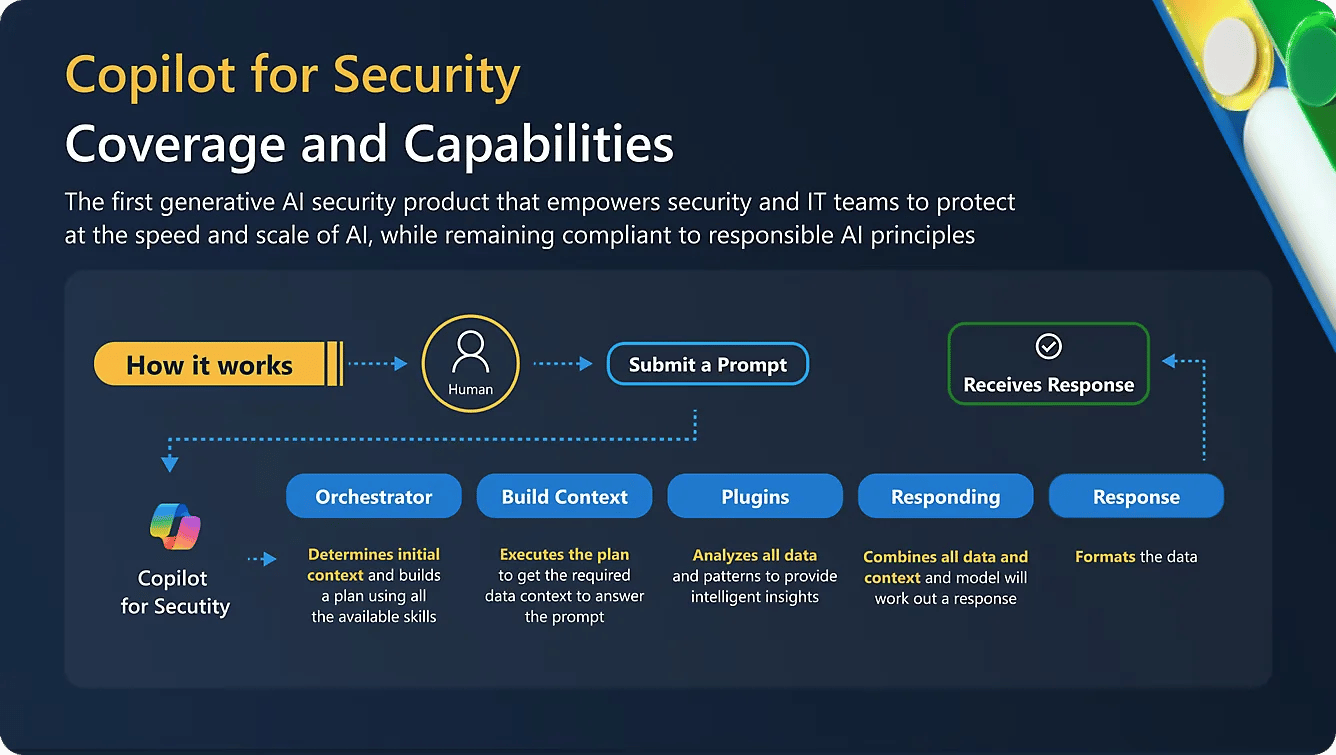

The user enters a question or command into the prompt.

Copilot for Security enhances the user’s prompt with its security-specific capabilities, which combine unique global threat intelligence with an ever-growing set of cyber skills. The system is built on deep Microsoft security knowledge and continually learns and adapts to security operations.

Then, using techniques such as fine-tuning, Copilot further augments the analyst’s work. It also grounds the prompt in up-to-date threat intelligence, informed by Microsoft’s 78 trillion daily signals and human intelligence.

Copilot for Security connects directly to insights and to Microsoft’s end-to-end security products, which helps improve data quality and reduce errors, completing the learning loop.

Finally, Copilot for Security formats the response according to your prompt instructions, using Microsoft’s sophisticated system and data proficiency to move at the speed and scale of AI. The response can take the form of text, code, or a visual that helps analysts see the full context of an incident, its impact, and the next steps, whether to deepen their understanding or to act directly on remediation and defence hardening.
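
To make the flow above easier to picture, here is a minimal Python sketch of the same stages: take a prompt, attach matching skills, ground it with threat intelligence, generate a response, and format the result. It is an illustration only, not Microsoft's implementation or API. Every name in it (`PromptContext`, `select_skills`, `ground_prompt`, `generate_response`, `format_response`, `run_pipeline`, and the sample catalogues) is hypothetical, and the model call is replaced by a simple placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Holds the user's prompt plus whatever each stage attaches to it."""
    prompt: str
    skills: list = field(default_factory=list)
    grounding: list = field(default_factory=list)


def select_skills(ctx, skill_catalog):
    # Hypothetical stage: attach every skill whose keyword appears in the prompt.
    lowered = ctx.prompt.lower()
    ctx.skills = [skill for keyword, skill in skill_catalog.items() if keyword in lowered]
    return ctx


def ground_prompt(ctx, threat_intel):
    # Hypothetical stage: attach threat-intelligence notes relevant to the prompt.
    lowered = ctx.prompt.lower()
    ctx.grounding = [note for keyword, note in threat_intel.items() if keyword in lowered]
    return ctx


def generate_response(ctx):
    # Placeholder for the language model call: it only echoes what it was given.
    return "Prompt: {} | Skills: {} | Context: {}".format(
        ctx.prompt,
        ", ".join(ctx.skills) or "none",
        "; ".join(ctx.grounding) or "none",
    )


def format_response(text, output_format="text"):
    # Final stage: wrap the answer in the format the user asked for.
    return {"format": output_format, "body": text}


def run_pipeline(prompt, skill_catalog, threat_intel, output_format="text"):
    ctx = PromptContext(prompt=prompt)
    ctx = select_skills(ctx, skill_catalog)
    ctx = ground_prompt(ctx, threat_intel)
    return format_response(generate_response(ctx), output_format)


# Sample data invented for this sketch.
SKILLS = {"incident": "incident_summary", "script": "script_analysis"}
INTEL = {"phishing": "Placeholder note about a phishing campaign"}

result = run_pipeline("Summarize the phishing incident", SKILLS, INTEL)
print(result["body"])
```

Keeping each stage as a separate function mirrors the step-by-step description: any stage can be swapped out or inspected without touching the others.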